About Cayman National

Established in the Isle of Man in 1985, Cayman National offers a range of local private client wealth management services, encompassing Banking, Fiduciary and Fund Custody Services. It is part of the Cayman National Corporation group of companies, with offices in the Isle of Man, the Cayman Islands and Dubai.

Cayman National Corporation was set up in 1974 and has provided its customers with a comprehensive range of domestic and international financial services. Its client portfolio ranges from wealthy individuals and their families to entrepreneurs, smaller companies, investment businesses, financial intermediaries and charitable concerns.

About the 2019 leak

In 2019, the well-known hacktivist Phineas Fisher released about 2 terabytes of data stolen from Cayman National. Among other files, the leak included an "October 2019" directory with 11 7-Zip archives containing 25 virtual drive image files in Hyper-V format (VHD and VHDX).

We decided to prepare a case study based on these files because they are real company data, not a more or less synthetic benchmark, and at the same time they are publicly available, so anyone can download them and verify all the results presented below.

Overview of the files

Of the 25 virtual drive image files unpacked from the "October 2019" directory:

- 21 files are complete and readable

- 3 files are broken to the point where nothing can be done with them during exfiltration, except making a compressed raw copy for later manual recovery

- 1 file is broken in a special way (discussed further below)

Let's take a closer look at the files. Each image contains 1 to 3 NTFS partitions: always exactly 1 "main" partition, and possibly some additional boot partitions. In the table below, files and megabytes from boot partitions are added to the corresponding main partitions.

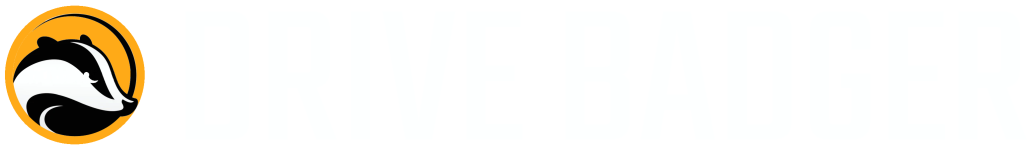

| image | image size [MB] | total space usage [MB] | total space usage [%] | total files |
|---|---|---|---|---|
| Athol_-_File_Application_Server/Virtual Hard Disks/Athol_C.vhd | 71 680 | 61 522 | 85.83 | 301 381 |
| Athol_-_File_Application_Server/Virtual Hard Disks/Athol_S.vhd | 430 080 | 345 568 | 80.35 | 405 639 |
| CN-AMLT/CN-AMLT_C.vhdx | 51 204 | 24 351 | 47.56 | 167 501 |
| CN-FS01/CN-FS01_C.vhdx | 71 684 | 26 569 | 37.06 | 204 568 |
| CN-PAMLT/CN-PAMLT_C.vhdx | 51 204 | 26 015 | 50.81 | 169 695 |
| CN-ProBank/CN-ProBanx_C.vhdx | 102 404 | 98 662 | 96.35 | 317 480 |
| CN-WEB/CN-WEB_C.vhdx | 51 204 | 12 996 | 25.38 | 137 013 |
| Hyper-V/Virtual Hard Disks/CaymanUAT_C.vhd | 184 320 | 118 982 | 64.55 | 200 817 |
| Hyper-V/Virtual Hard Disks/CN-AMLTS_C.vhdx | 92 164 | 81 938 | 88.90 | 188 364 |
| Hyper-V/Virtual Hard Disks/CN-BPM_C.vhdx | 40 964 | 38 462 | 93.89 | 239 471 |
| Hyper-V/Virtual Hard Disks/CN-Intranet_C.vhdx | 40 964 | 25 609 | 62.52 | 224 476 |
| Hyper-V/Virtual Hard Disks/CN-LFR_C.vhdx | 61 444 | 45 628 | 74.26 | 200 967 |
| Hyper-V/Virtual Hard Disks/CN-LFR_E.vhdx | 409 604 | 291 757 | 71.23 | 6 467 670 |
| Hyper-V/Virtual Hard Disks/CN-LFR_F.vhdx | 204 804 | 90 480 | 44.18 | 2 206 860 |
| Hyper-V/Virtual Hard Disks/CN-LFRT_C.vhdx | 61 444 | 20 720 | 33.72 | 161 189 |
| Hyper-V/Virtual Hard Disks/CN-SQL_C.vhdx | 61 444 | 20 295 | 33.03 | 119 963 |
| Hyper-V/Virtual Hard Disks/CN-SQL_E.vhdx | 153 604 | 3 108 | 2.02 | 1 648 |
| Primacy/Primacy_C.vhd | 104 448 | 91 207 | 87.32 | 233 394 |
| Primacy/Primacy_E.vhd | 266 240 | 90 596 | 34.03 | 25 077 |
| Primacy2016_SQL/SQL_C.vhdx | 102 404 | 93 453 | 91.26 | 369 913 |
| Primacy2016_SQL/SQL_D.vhdx | 307 204 | 230 271 | 74.96 | 18 520 |
| total | 2 920 512 | 1 838 189 | 60.91 (unweighted average) | 12 361 606 |
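The totals row can be cross-checked from the per-image values. The 60.91% figure is the plain (unweighted) mean of the 21 per-image percentages; dividing total used space by total image size gives a somewhat higher, size-weighted figure (about 62.9%). A small Python sketch, with values copied from the table (image names shortened to file names):

```python
# (image, size [MB], used space [MB], files), copied from the table above
IMAGES = [
    ("Athol_C.vhd", 71_680, 61_522, 301_381),
    ("Athol_S.vhd", 430_080, 345_568, 405_639),
    ("CN-AMLT_C.vhdx", 51_204, 24_351, 167_501),
    ("CN-FS01_C.vhdx", 71_684, 26_569, 204_568),
    ("CN-PAMLT_C.vhdx", 51_204, 26_015, 169_695),
    ("CN-ProBanx_C.vhdx", 102_404, 98_662, 317_480),
    ("CN-WEB_C.vhdx", 51_204, 12_996, 137_013),
    ("CaymanUAT_C.vhd", 184_320, 118_982, 200_817),
    ("CN-AMLTS_C.vhdx", 92_164, 81_938, 188_364),
    ("CN-BPM_C.vhdx", 40_964, 38_462, 239_471),
    ("CN-Intranet_C.vhdx", 40_964, 25_609, 224_476),
    ("CN-LFR_C.vhdx", 61_444, 45_628, 200_967),
    ("CN-LFR_E.vhdx", 409_604, 291_757, 6_467_670),
    ("CN-LFR_F.vhdx", 204_804, 90_480, 2_206_860),
    ("CN-LFRT_C.vhdx", 61_444, 20_720, 161_189),
    ("CN-SQL_C.vhdx", 61_444, 20_295, 119_963),
    ("CN-SQL_E.vhdx", 153_604, 3_108, 1_648),
    ("Primacy_C.vhd", 104_448, 91_207, 233_394),
    ("Primacy_E.vhd", 266_240, 90_596, 25_077),
    ("SQL_C.vhdx", 102_404, 93_453, 369_913),
    ("SQL_D.vhdx", 307_204, 230_271, 18_520),
]

total_size = sum(size for _, size, _, _ in IMAGES)
total_used = sum(used for _, _, used, _ in IMAGES)
total_files = sum(files for _, _, _, files in IMAGES)

# Plain mean of per-image percentages: every image counts equally.
unweighted_pct = sum(100 * used / size for _, size, used, _ in IMAGES) / len(IMAGES)
# Size-weighted: share of all image megabytes that is actually in use.
weighted_pct = 100 * total_used / total_size

print(f"size: {total_size} MB, used: {total_used} MB, files: {total_files}")
print(f"unweighted: {unweighted_pct:.2f}%, weighted: {weighted_pct:.2f}%")
```

The unweighted mean treats the small CN-BPM_C.vhdx image the same as the large Athol_S.vhd image, which is why the two averages differ.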

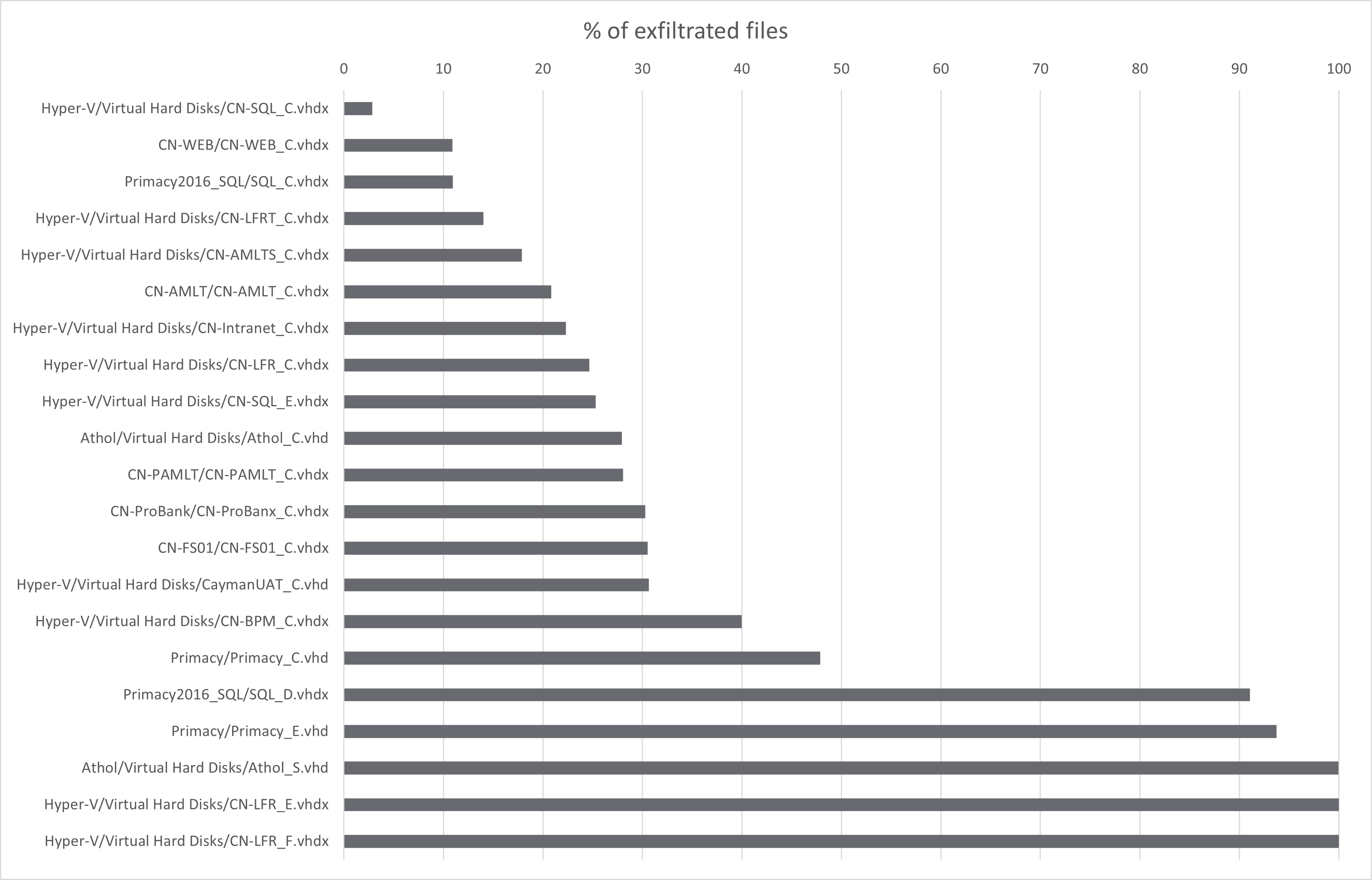

What drew our attention was the quite high percentage of used space on several drives, as shown in the diagram above.

Broken files

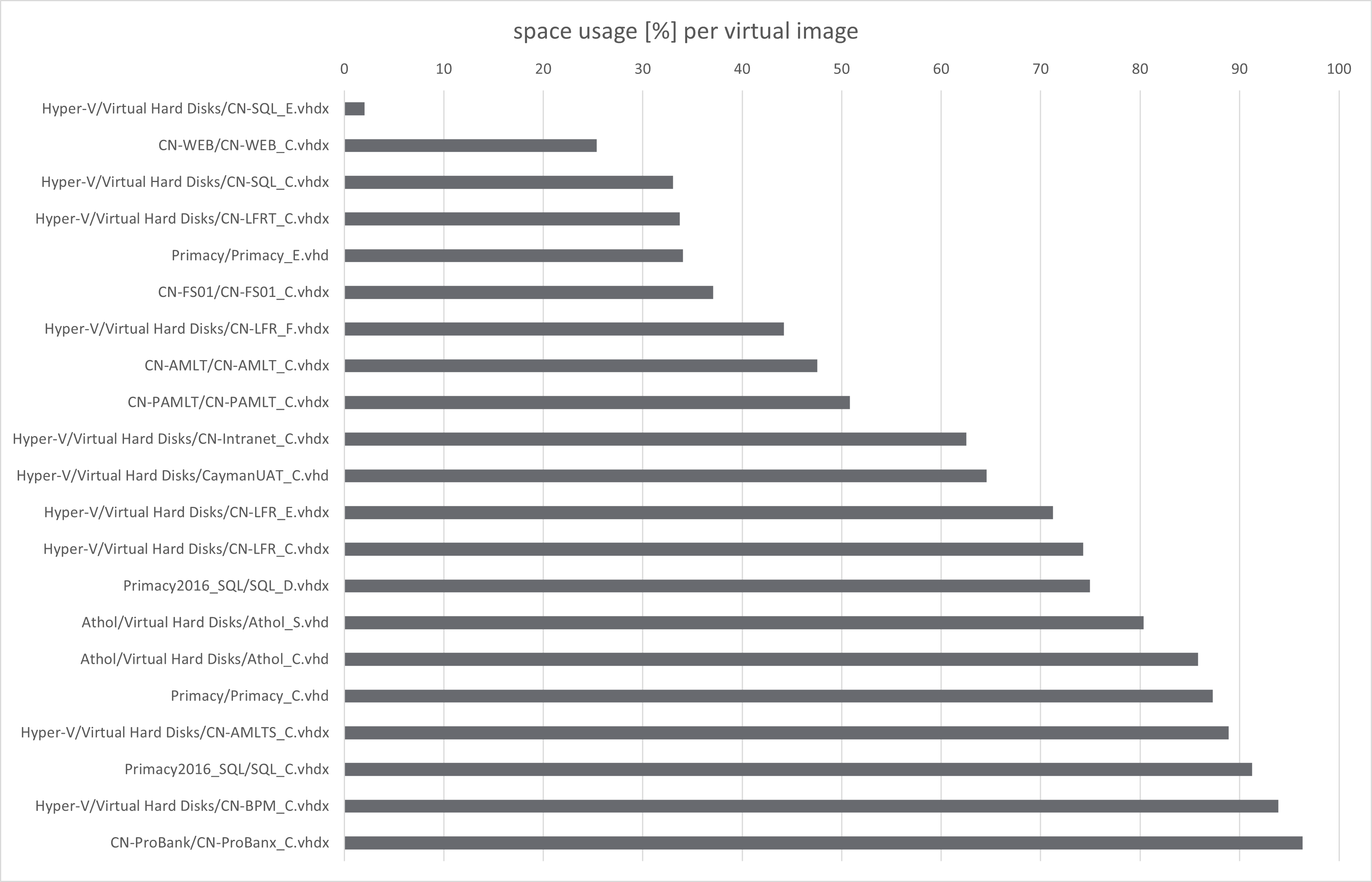

As mentioned above, 4 virtual drive image files were broken, in 3 different ways:

- the CN-FS01_D.vhdx image, containing documents from the internal file server, was broken in a tricky way that still allowed qemu to mount it, which led to an unsuccessful exfiltration (of the total 148 gigabytes, 0 files were exfiltrated, only some broken symbolic links)

- the CN-DC1_C.vhdx image, containing the main filesystem of the Active Directory domain controller, was unreadable by qemu (and thus exfiltrated as a raw file); it was partially recoverable manually using 7-Zip, yielding a partition image that is still broken and not mountable, but sufficient to continue analysis with specialized data recovery tools

- the other 2 images (Exchange 2013 server) were also unreadable by qemu (which caused their exfiltration as raw files), but only needed their journal replayed to recover the data
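As a very coarse first check before handing a file to qemu, one can look for the container signatures: a VHDX file starts with the 8-byte file type identifier "vhdxfile", and a VHD file ends with a 512-byte footer whose cookie is "conectix". The sketch below is our own illustration, not part of Drive Badger, and the function name is hypothetical. Such a check only catches gross header damage; it would not have flagged an image like CN-FS01_D.vhdx, which qemu could still mount.

```python
import os
import tempfile

VHDX_SIGNATURE = b"vhdxfile"  # file type identifier at offset 0 of a VHDX file
VHD_COOKIE = b"conectix"      # cookie at the start of the 512-byte VHD footer


def detect_container(path: str) -> str:
    """Classify a file as 'vhdx', 'vhd' or 'unknown' from its signatures only."""
    size = os.path.getsize(path)
    with open(path, "rb") as f:
        if f.read(8) == VHDX_SIGNATURE:
            return "vhdx"
        if size >= 512:
            f.seek(size - 512)  # VHD footer: last 512 bytes of the file
            if f.read(8) == VHD_COOKIE:
                return "vhd"
    return "unknown"  # candidate for a compressed raw copy instead of mounting


# Usage with synthetic files (not real disk images).
with tempfile.TemporaryDirectory() as tmp:
    samples = {
        "a.vhdx": VHDX_SIGNATURE + bytes(1024),
        "b.vhd": bytes(1024) + VHD_COOKIE + bytes(504),
        "c.bin": bytes(2048),
    }
    results = {}
    for name, content in samples.items():
        path = os.path.join(tmp, name)
        with open(path, "wb") as f:
            f.write(content)
        results[name] = detect_container(path)

print(results)
```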

The compression ratios of these images are shown in the diagram at the beginning of this section.

Our other tests suggest that these compression ratios are fairly representative (for the lz4 -1 compression method):

- for Windows and Windows Server hosts with at least a few months of update installation history, you can expect a ratio of ~40-45% for the main filesystem

- for fresh Windows hosts, where free space on the virtual drive was not previously allocated and still contains mostly zero bytes, you can expect a much better compression ratio, even 30% (but usually around 35%)

- VHD containers tend to have better compression ratios than VHDX containers; we are not sure why (both formats are very similar)

- dynamically sized containers have worse compression ratios than fixed-size containers (although they can still be smaller for fresh systems)

- containers with user data usually have worse compression ratios than containers with Windows files or Program Files directories, and it always depends on the type of data and on filesystem fragmentation (or how much free space was previously allocated)
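Why previously allocated free space matters so much: never-allocated areas of a virtual drive are mostly zero bytes and compress almost to nothing, while previously allocated areas still hold old data. The sketch below illustrates this with zlib from the Python standard library as a stand-in for lz4 (so absolute numbers differ from lz4 -1), and uses random bytes as a simplified model of old, poorly compressible data. The ratio is the compressed size as a percentage of the original size, so lower is better, matching the percentages above.

```python
import os
import zlib


def compression_ratio(data: bytes, level: int = 1) -> float:
    """Compressed size as a percentage of the original size (lower is better)."""
    return 100.0 * len(zlib.compress(data, level)) / len(data)


SIZE = 4 * 1024 * 1024  # 4 MiB synthetic "drive images"

# Fresh host: half of the space was never allocated and is still zero-filled.
fresh_image = os.urandom(SIZE // 2) + bytes(SIZE // 2)
# Aged host: free space was previously allocated and still holds old data,
# simulated here with random (incompressible) bytes.
aged_image = os.urandom(SIZE)

print(f"fresh: {compression_ratio(fresh_image):.1f}%")
print(f"aged:  {compression_ratio(aged_image):.1f}%")
```

Real leftover data is usually partly compressible, so real-world ratios fall between these two extremes.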

How much space is saved using Drive Badger?

One of the main advantages of Drive Badger over competing solutions, as well as over ad-hoc scripts, is the performance of the exfiltration process, understood as both speed and a reduced amount of data that needs to be copied to the target drive.

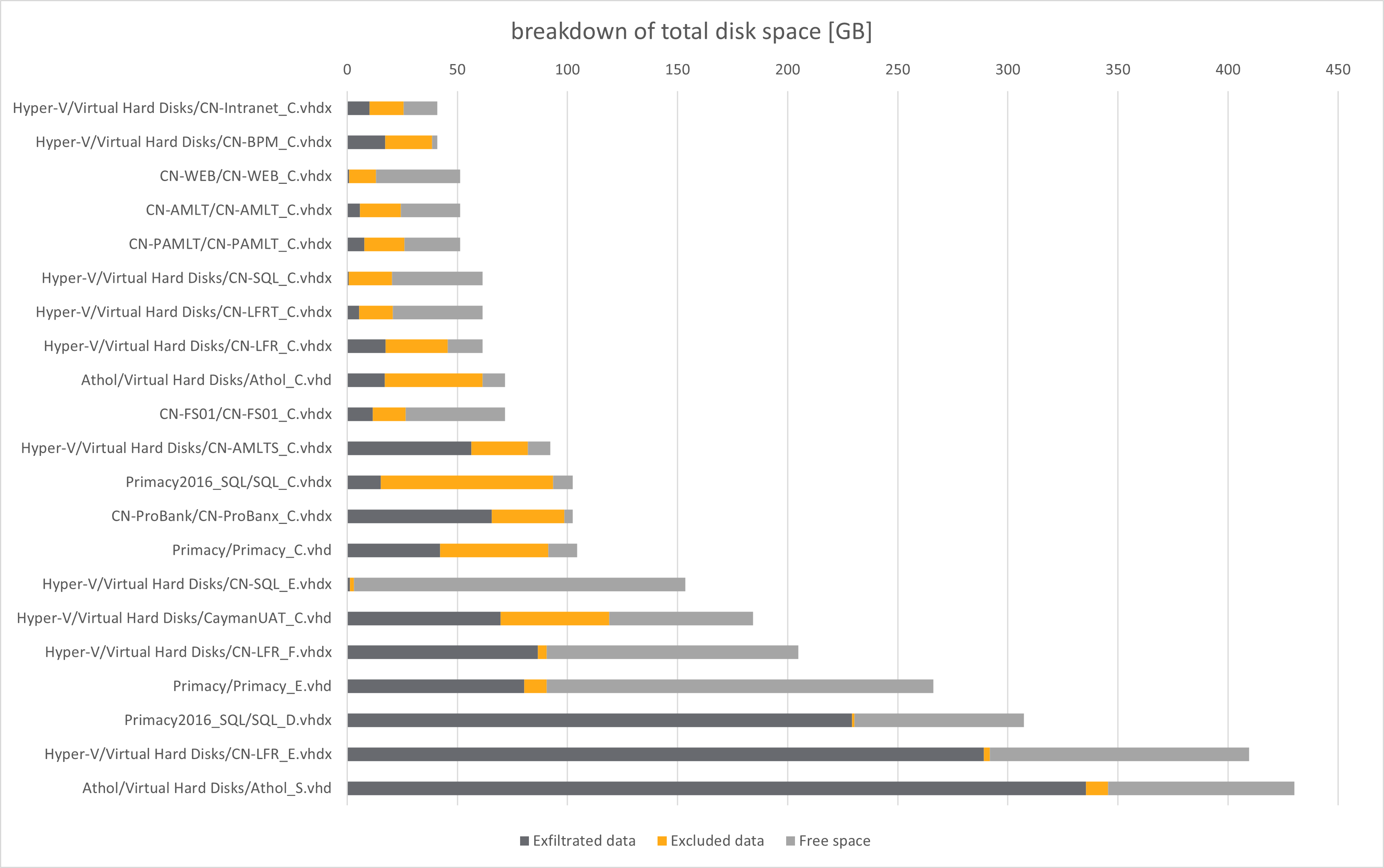

Let's now break down the 2.92 TB of original data (excluding broken containers) into:

- 1.36 TB of space required for data to be exfiltrated (46.6% of the overall container size)

- 0.48 TB of space saved by excluding less valuable data using over 400 unique exclusion rules

- 1.08 TB of remaining free space inside the original containers (which is also saved by exfiltrating only individual files instead of whole virtual drives)
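These figures can be reproduced from the totals in the table: used space minus exfiltrated data gives the excluded part, and image size minus used space gives the free part. A quick check in Python, assuming decimal units (1 TB = 1 000 000 MB), which is what makes 2 920 512 MB equal to 2.92 TB. Since 1.36 TB is itself a rounded value, this is only a consistency check:

```python
TOTAL_MB = 2_920_512   # total size of the 21 readable images (table)
USED_MB = 1_838_189    # total used space (table)
EXFILTRATED_TB = 1.36  # measured exfiltration size (text above)

MB_PER_TB = 1_000_000  # decimal units assumed

total_tb = TOTAL_MB / MB_PER_TB
used_tb = USED_MB / MB_PER_TB
free_tb = total_tb - used_tb              # free space inside containers, never copied
excluded_tb = used_tb - EXFILTRATED_TB    # used space skipped by exclusion rules
exfiltrated_pct = 100 * EXFILTRATED_TB / total_tb

print(f"exfiltrated: {EXFILTRATED_TB:.2f} TB ({exfiltrated_pct:.1f}%)")
print(f"excluded:    {excluded_tb:.2f} TB")
print(f"free:        {free_tb:.2f} TB")
```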

Of course, as shown on the diagram, the ratio of exfiltrated to excluded data differs between containers.

In general, a freshly installed Windows, without any user-generated files or additionally installed programs, requires less than 1 GB of space for exfiltration, since most Windows internal files and many other groups of files repeated across computers (e.g. Adobe Reader, antivirus databases) are excluded by the exclusion rules (the yellow parts on the diagram).

So, the dark grey parts of the diagram represent:

- files generated (downloaded etc.) by user(s)

- non-obvious installed software

- databases

- backup files

- logs

- everything that was too hard to classify as repeatable or worthless
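The effect of the exclusion rules mentioned above can be illustrated with a few glob patterns matched against paths inside a mounted partition. This is a hypothetical sketch: the rule syntax, the patterns, the function names and the sample paths are our own assumptions, not Drive Badger's actual rule format or its 400+ rules.

```python
from fnmatch import fnmatchcase

# Hypothetical exclusion rules: glob patterns matched against lowercased paths
# relative to the partition root ("*" also matches "/" in fnmatch).
EXCLUDE_RULES = [
    "windows/winsxs/*",
    "windows/installer/*",
    "program files*/adobe/acrobat reader*/*",
    "programdata/microsoft/windows defender/definition updates/*",
]


def normalize(path: str) -> str:
    """Windows-style path -> lowercase, forward slashes, no leading slash."""
    return path.replace("\\", "/").lower().lstrip("/")


def is_excluded(path: str) -> bool:
    p = normalize(path)
    return any(fnmatchcase(p, rule) for rule in EXCLUDE_RULES)


def split_files(paths: list[str]) -> tuple[list[str], list[str]]:
    """Split paths into (to_exfiltrate, excluded)."""
    keep, skip = [], []
    for path in paths:
        (skip if is_excluded(path) else keep).append(path)
    return keep, skip


files = [
    r"Windows\WinSxS\amd64_example\example.dll",
    r"Program Files (x86)\Adobe\Acrobat Reader DC\Reader\AcroRd32.exe",
    r"Users\jdoe\Documents\report.pdf",
    r"Backups\example.bak",
]
keep, skip = split_files(files)
print("exfiltrate:", keep)
print("excluded:  ", skip)
```

Lowercasing before matching with fnmatchcase keeps the behaviour identical on every host platform, since plain fnmatch applies platform-specific case and separator normalization.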

In the Cayman National case in particular, most space is required for:

- Microsoft SQL Server databases (live databases and their numerous backups stored in many directories, mainly related to AML and other specialized banking software written by Primacy Corporation)

- document scans, mostly related to Laserfiche DMS, but also standalone PDF files

- Outlook PST files (another 300+ GB of mail data in Exchange 2013 format is stored inside the broken CN-EX01_S.vhdx container, which needs to be exfiltrated separately, as a raw file)

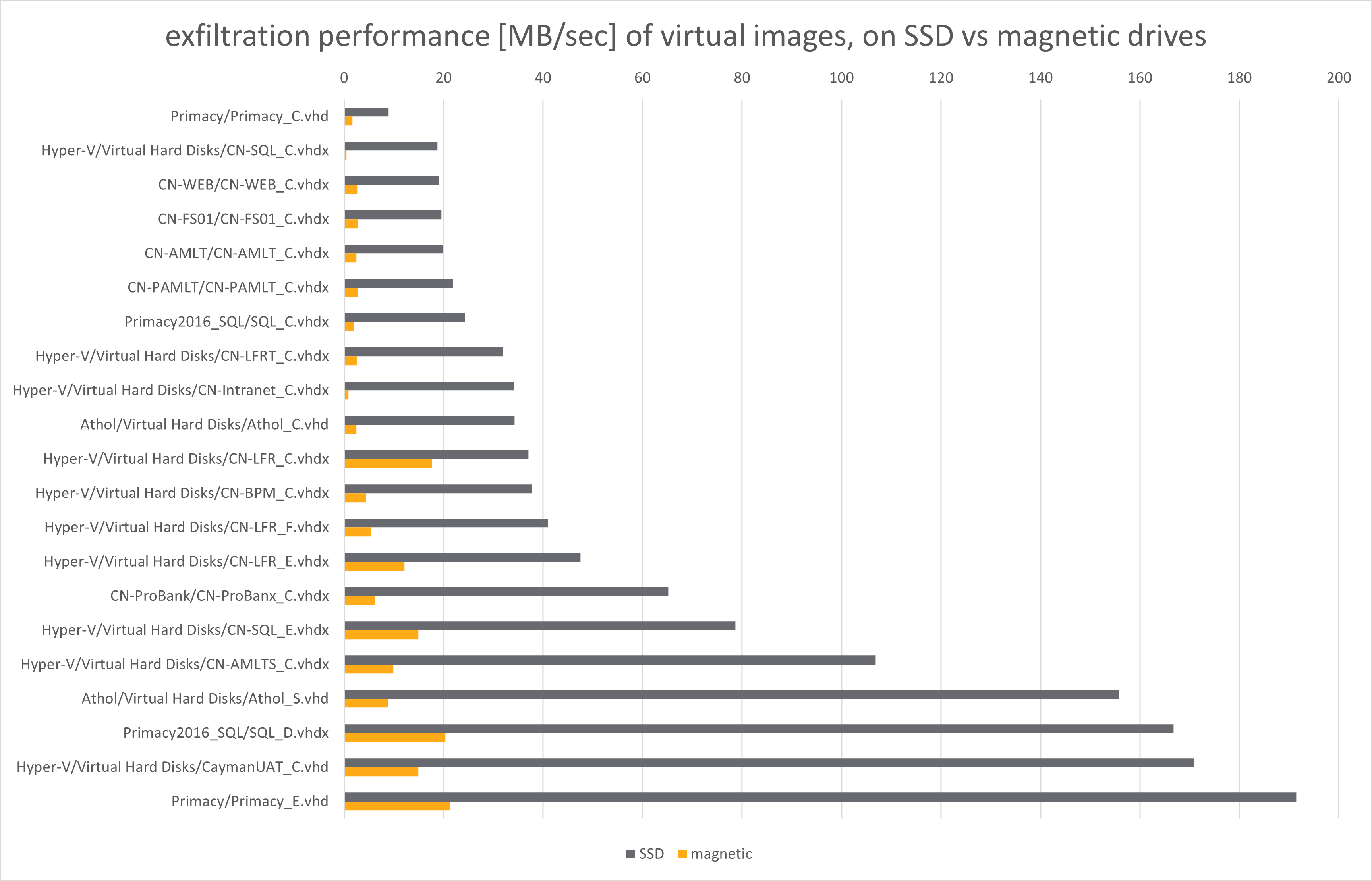

Exfiltration performance: big vs small files, SSD vs magnetic drives

Of the files mentioned above, Microsoft SQL Server databases and Outlook PST files are very big, typically multiple gigabytes per file. Document scans (especially those created by Laserfiche software), on the other hand, are very small files, but there are lots of them: in fact, 87.4% of all exfiltrated files, or 70.2% of all source files, belong to Laserfiche DMS data volumes.
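The 70.2% figure is consistent with the table if the Laserfiche DMS data volumes are CN-LFR_E.vhdx and CN-LFR_F.vhdx. That mapping is our assumption, based on the image names, and the exfiltrated-file count behind 87.4% is not in the table, so only the source-side share can be checked:

```python
# File counts from the table. Assumption: these two images hold the Laserfiche
# DMS data volumes (inferred from the names, not stated in the source data).
LASERFICHE_VOLUMES = {"CN-LFR_E.vhdx": 6_467_670, "CN-LFR_F.vhdx": 2_206_860}
ALL_SOURCE_FILES = 12_361_606  # "total files" row of the table

laserfiche_files = sum(LASERFICHE_VOLUMES.values())
share = 100 * laserfiche_files / ALL_SOURCE_FILES
print(f"{laserfiche_files} of {ALL_SOURCE_FILES} files ({share:.1f}%)")
```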

How does this affect exfiltration performance?

- many small files are obviously much slower to exfiltrate than big files

- complex directory structures (e.g. Laserfiche DMS data volumes) also slow down the exfiltration process, compared with the same set of files in a flattened directory structure

On the other hand, small files can be read at once (technically in 3 system calls: stat, open and read), while bigger files may need multiple subsequent reads. In some very specific scenarios, mainly with magnetic drives or slow iSCSI links combined with VHDX containers, exfiltration of big files can be very slow, much slower than for small files. However, our experiments clearly showed that most performance peaks and drops, and other performance-affecting anomalies, are caused by qemu, which is very sensitive to problems with VMDK/VHD/VHDX containers or the underlying filesystems, especially when the underlying device has slow or saturated I/O.
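The difference between small and big files can be sketched at the system-call level. The sketch below is our own illustration; the 1 MiB read size and the function name are assumptions, not Drive Badger internals. A small file needs stat, open and a single read (plus close), while a big file needs a loop of reads, and on a FUSE-mounted container each additional read adds more requests through the FUSE/qemu stack.

```python
import os
import tempfile

CHUNK_SIZE = 1024 * 1024  # hypothetical read size: 1 MiB per read() call


def read_file(path: str) -> tuple[bytes, int]:
    """Read a whole file; return its contents and the number of read() calls."""
    size = os.stat(path).st_size     # system call 1: stat
    fd = os.open(path, os.O_RDONLY)  # system call 2: open
    try:
        if size <= CHUNK_SIZE:
            # Small file: one read() normally returns the whole content.
            return os.read(fd, max(size, 1)), 1
        # Big file: repeated read() calls until end of file (empty result).
        chunks, calls = [], 0
        while True:
            chunk = os.read(fd, CHUNK_SIZE)
            calls += 1
            if not chunk:
                break
            chunks.append(chunk)
        return b"".join(chunks), calls
    finally:
        os.close(fd)                 # plus close


with tempfile.TemporaryDirectory() as tmp:
    small_path = os.path.join(tmp, "small.bin")
    big_path = os.path.join(tmp, "big.bin")
    with open(small_path, "wb") as f:
        f.write(b"x" * 100)
    with open(big_path, "wb") as f:
        f.write(b"y" * (5 * CHUNK_SIZE // 2))  # 2.5 MiB
    small_data, small_calls = read_file(small_path)
    big_data, big_calls = read_file(big_path)

print(small_calls, big_calls)
```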

In general, exfiltration performance follows the distribution of file sizes within each container, as shown in the diagram above.

VMDK/VHD/VHDX containers are mounted using the FUSE library on top of the QEMU hypervisor. Unfortunately, this stack is not only unstable and very sensitive to many sorts of problems, but also slow; more precisely, it adds huge overhead to each file and directory operation.

This is especially visible for magnetic drives (yellow bars on the diagram above), where exfiltration performance is on average 11.7x lower than for SSD drives, and can be dramatically low for main Windows partitions (e.g. CN-SQL_C.vhdx or CN-Intranet_C.vhdx above).
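To compare containers or source drives in this way, it is enough to time a full read of every file and report both files per second and megabytes per second: the first metric is dominated by per-operation overhead, the second by raw transfer speed. A minimal sketch, assuming the container is already mounted; the function name and the mount point in the comment are made-up examples, not Drive Badger's own tooling:

```python
import os
import tempfile
import time


def measure_read_throughput(root: str) -> dict:
    """Read every regular file under root once; return counts and rates."""
    files = 0
    total_bytes = 0
    start = time.perf_counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path) or not os.path.isfile(path):
                continue  # skip symbolic links, sockets, device files etc.
            with open(path, "rb") as f:
                while chunk := f.read(1024 * 1024):
                    total_bytes += len(chunk)
            files += 1
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    return {
        "files": files,
        "bytes": total_bytes,
        "files_per_s": files / elapsed,
        "mb_per_s": total_bytes / 1_000_000 / elapsed,
    }


# Real use would point at a mounted container, e.g. "/mnt/cn-sql-c" (hypothetical).
# Here: a small synthetic tree with many small files and one bigger file.
with tempfile.TemporaryDirectory() as tmp:
    for i in range(50):
        sub = os.path.join(tmp, f"dir{i % 5}")
        os.makedirs(sub, exist_ok=True)
        with open(os.path.join(sub, f"scan{i}.tif"), "wb") as f:
            f.write(bytes(2048))
    with open(os.path.join(tmp, "db.bak"), "wb") as f:
        f.write(bytes(3_000_000))
    stats = measure_read_throughput(tmp)

print(stats)
```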

About test hardware

All tests were performed on Kali Linux 2021.1 with kernel 5.10.13-1kali1 (amd64), on the following hardware:

- Dell Optiplex 9010 SFF

- CPU: Core i7-3770 - 8 threads, 8 MB L3 cache, 3.40 GHz, Passmark score 6395 (as of April 2022), AES-NI support

- memory: 8 GB - DDR3 1600MHz

- source SSD drive 1: WD Elements SE 2TB USB 3.2 Gen. 1

- source SSD drive 2: GOODRAM HL100 1TB USB 3.2 Gen. 2

- source magnetic drive: Seagate One Touch Portable 5TB USB 3.2 Gen. 1

- target SSD drive: SanDisk Extreme Portable SSD 2TB USB 3.2 Gen. 1 (SanDisk X600 inside)

Drive and encryption configuration:

- all drives were connected to front USB 3.0 ports

- source drives were formatted as a single ext4 partition, without any encryption (hardware or software)

- target drive: LUKS-encrypted ext4 persistent partition (standard Drive Badger configuration plus Hyper-V exfiltration support, prepared using deployment-scripts)

Availability of raw data

This spreadsheet contains all the raw data on both the source files and the exfiltration process performance.

If you want to discuss this data, or want us to clarify anything that is missing or unclear, please contact us via the email address in the site footer.

Legal disclaimer

- While the original files leaked in 2019 are very easy to find, we obviously cannot provide direct links to them for legal reasons.

- We did not process data from these leaked files for any reason other than testing and benchmarking the Drive Badger exfiltration process itself, and preparing this case study. In particular, we were not interested in any details regarding the business operations of Cayman National (or its clients).

- We were not involved in, or affiliated with, the original leak in any way. We simply downloaded the files, just as anyone else can.